By Prasanna Veerapandi, Associate Lecturer & Consultant, Software Systems Practice, NUS-ISS

Have you ever wanted to create your own mobile application or build your own machine learning model? This article, based on a talk shared at NUS-ISS Learning Day 2019, introduces TensorFlow Lite, a set of tools that helps developers run TensorFlow models efficiently on mobile devices, Android devices in particular.

As a worked example, a model is trained on the Quick Draw dataset and deployed as an Android app. The app recognises a picture drawn by the user and predicts the name of the object.

Diving right in:

STEPS:

| Step | Task |
| --- | --- |
| Step 1 | Data Preparation |
| Step 2 | Model building |
| Step 3 | Metrics & Evaluation |
| Step 4 | Save & Export the model |
| Step 5 | Load the model in Android App |

DEMO APP:

1. Data Preparation

The Quick Draw dataset is a collection of 50 million drawings across more than 300 categories, contributed by players of the game Quick, Draw! and published by Google Creative Lab. Here we use a subset of 100 balanced categories, such as t-shirt, table, chair and headphones. The dataset is provided as NumPy arrays, which can be downloaded from a public Google Cloud Storage bucket.

TIP: Instead of using the whole dataset for training, split it into a training set and a testing set at a ratio of 80/20. The training set is used to train the model; once the model is trained, evaluation metrics are calculated on the testing set. It is also recommended to shuffle the training set so that the model does not learn anything from the order of the samples.
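The shuffle-then-split idea in the tip can be sketched in a few lines of plain Python. This is only an illustration: the helper name `shuffle_and_split`, the fixed seed and the toy data are assumptions, and a real pipeline would more likely operate on the NumPy arrays directly.

```python
import random


def shuffle_and_split(samples, labels, train_fraction=0.8, seed=42):
    """Shuffle (sample, label) pairs together, then split them into train and test sets."""
    if len(samples) != len(labels):
        raise ValueError("samples and labels must have the same length")
    pairs = list(zip(samples, labels))
    # A local random generator keeps the shuffle reproducible
    # without touching the global random state.
    random.Random(seed).shuffle(pairs)
    cut = int(len(pairs) * train_fraction)
    return pairs[:cut], pairs[cut:]


# Toy stand-in data: ten "drawings" (just integers here) with two hypothetical labels.
samples = list(range(10))
labels = ["car" if i % 2 == 0 else "chair" for i in samples]

train, test = shuffle_and_split(samples, labels)
print(len(train), len(test))
```

Shuffling the pairs rather than the samples alone keeps every drawing attached to its own label, which is the detail that is easiest to get wrong.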

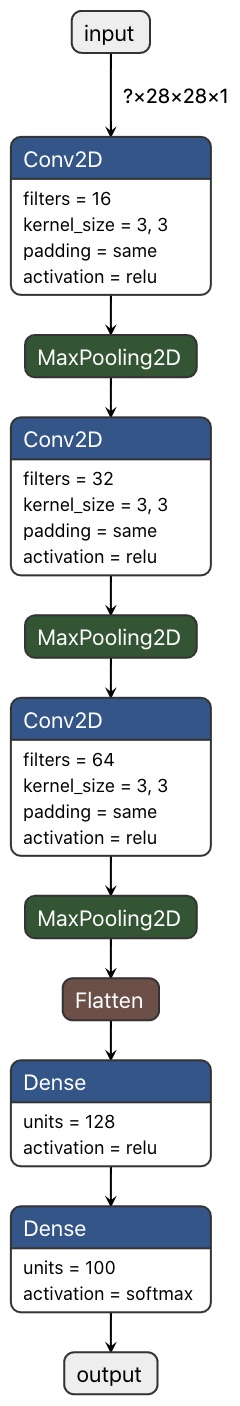

2. Model Building

The simplest model can be written as y = f(x), where f is the model, x is the input and y is the model output. The simplest neural network is y = g(f(x)), where f is a linear function and g is a non-linear activation function. Stacking more of these non-linear(linear(input)) pairs produces what is called a neural network. For example, stacking three of them gives:

model = NL ( L ( NL ( L ( NL ( L(x) ) ) ) ) )

where NL is a non-linear function and L is a linear function.
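To make the NL(L(...)) composition concrete, here is a minimal sketch in plain Python with three stacked pairs. It assumes fully connected linear layers and ReLU as the non-linearity; the weights are made-up numbers chosen for easy arithmetic, not values from the article's CNN.

```python
def linear(x, weights, bias):
    """L: one output per weight row, y_j = sum_i(w_ji * x_i) + b_j."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    """NL: element-wise max(0, v)."""
    return [max(0.0, v) for v in x]


def model(x, layers):
    """Apply NL(L(...)) once per layer, innermost layer first."""
    for weights, bias in layers:
        x = relu(linear(x, weights, bias))
    return x


# Hypothetical weights: 2 inputs -> 2 units -> 1 unit -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),
    ([[1.0, 1.0]], [0.5]),
    ([[2.0]], [-1.0]),
]

output = model([2.0, 1.0], layers)
print(output)
```

Without the non-linear step, the three linear layers would collapse into a single linear function, which is why the two kinds of function are interleaved.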

Fig 1. CNN Architecture used for Quick-Draw Dataset

Here ReLU and MaxPooling are the non-linear functions and Convolution2D is the linear function.

Finally, fit the model with model.fit(x, y, epochs=10). The number of epochs defines how many times every input is shown to the model. For the model to become the ideal function that maps x to y, it needs the right set of weights, which is found by an optimiser.

Training works as follows: pass some inputs through the function and observe its outputs, then measure how good the predictions are using a loss function. An optimiser such as SGD or Adam then adjusts the weights to minimise the loss. By minimising the loss function, one arrives at the right set of weights for the function that maps x to y.
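The predict, measure, adjust loop can be illustrated with a toy example. The sketch below is an assumption-laden stand-in for what Keras does internally: it fits a one-variable linear function with hand-written stochastic gradient descent on a squared-error loss, using made-up data, learning rate and epoch count.

```python
import random


def sgd_fit(xs, ys, learning_rate=0.02, epochs=500, seed=0):
    """Fit y = w * x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in a new order every epoch
        for x, y in data:
            error = (w * x + b) - y  # prediction minus target
            # Gradients of error**2 with respect to w and b.
            w -= learning_rate * 2 * error * x
            b -= learning_rate * 2 * error
    return w, b


def mean_squared_error(xs, ys, w, b):
    """Loss function: average squared difference between prediction and target."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data generated from the "ideal function" y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = sgd_fit(xs, ys)
loss = mean_squared_error(xs, ys, w, b)
print(w, b, loss)
```

The weights start at zero and end close to the values that generated the data, which is the sense in which minimising the loss recovers the right set of weights.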

3. Metrics & Evaluation

For a classification problem like this one (given an image, predict which category the object belongs to), use a confusion matrix to measure how accurate the model is. Precision and recall, which are derived from the confusion matrix, make the accuracy easier to quantify for each category.

score = model.evaluate(x_test , y_test)

For this small subset of 100 classes, this simple Convolutional Neural Network (CNN) architecture achieves about 93% accuracy on both the training and testing sets.
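The confusion matrix, precision and recall mentioned above can be computed by hand on a small example. The sketch below uses three hypothetical classes and invented predictions, not results from the Quick Draw model; rows are true classes and columns are predicted classes.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, classes):
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in classes] for t in classes]


def precision_recall(matrix, classes):
    """Return {class_name: (precision, recall)} from a confusion matrix."""
    metrics = {}
    for i, name in enumerate(classes):
        true_positives = matrix[i][i]
        predicted = sum(row[i] for row in matrix)  # column sum
        actual = sum(matrix[i])                    # row sum
        precision = true_positives / predicted if predicted else 0.0
        recall = true_positives / actual if actual else 0.0
        metrics[name] = (precision, recall)
    return metrics


# Invented labels for illustration only.
classes = ["car", "chair", "table"]
y_true = ["car", "car", "chair", "chair", "table", "table"]
y_pred = ["car", "chair", "chair", "chair", "table", "car"]

matrix = confusion_matrix(y_true, y_pred, classes)
metrics = precision_recall(matrix, classes)
accuracy = sum(matrix[i][i] for i in range(len(classes))) / len(y_true)

for name, row in zip(classes, matrix):
    print(name, row, metrics[name])
print("accuracy:", accuracy)
```

Overall accuracy is the sum of the diagonal divided by the number of samples, while precision and recall show which individual categories the model confuses.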

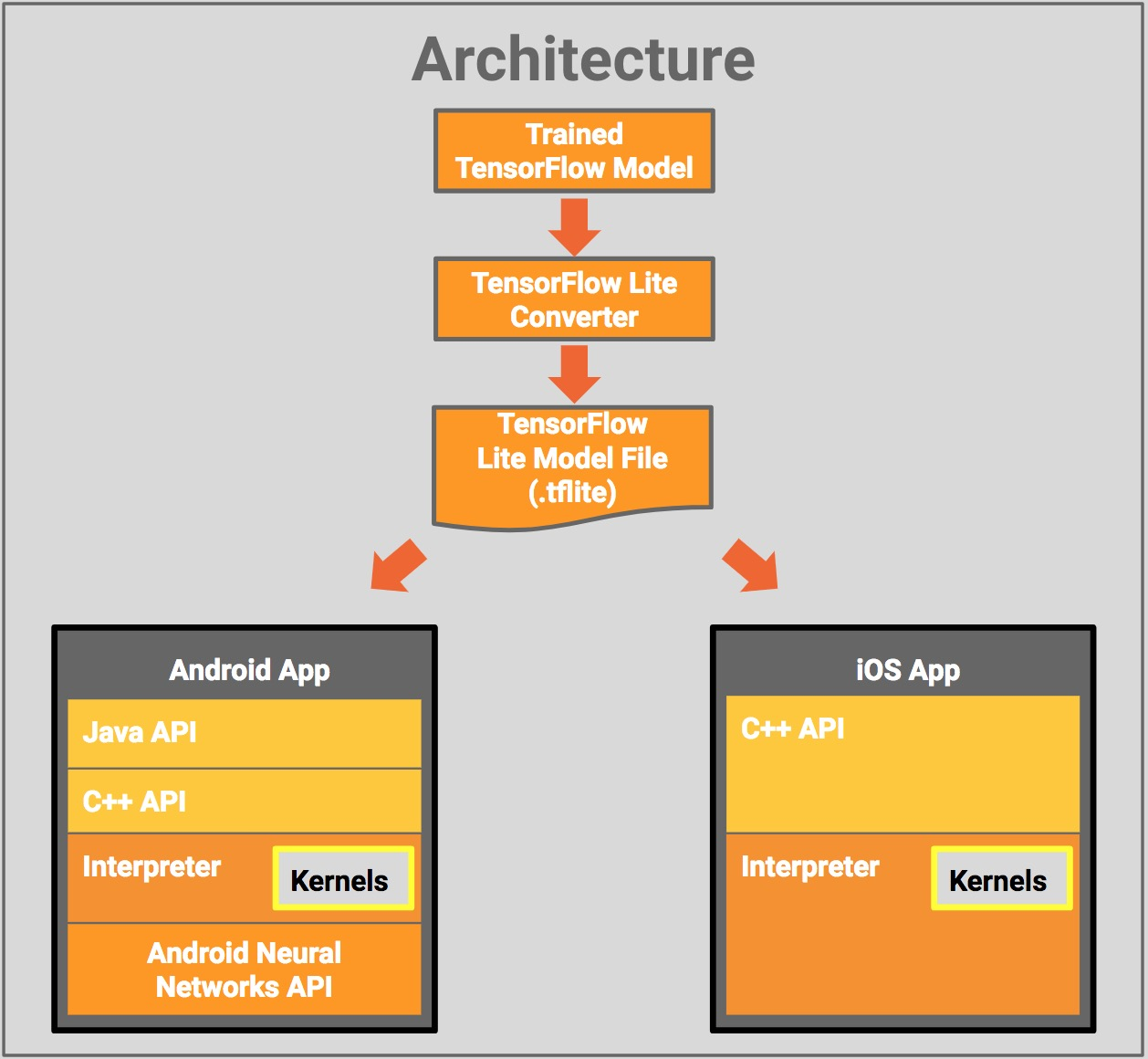

4. Save & Export the Model

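It is time to export the TensorFlow model (architecture + weights) into a file format that can be loaded into the Android app. TensorFlow Lite on Android uses the .tflite format for neural network models. To convert a TensorFlow Keras (.h5) file to the .tflite format, use the TFLiteConverter class. The call below is the TensorFlow 1.x API; TensorFlow 2.x provides tf.lite.TFLiteConverter.from_keras_model instead, which takes a loaded model rather than a file path:

import tensorflow as tf

converter = tf.lite.TFLiteConverter.from_keras_model_file("model.h5")

tflite_model = converter.convert()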

The exported model is about 3 MB in size.

5. Load the model in Android App

Now copy the .tflite model file into the Android assets folder. Do not forget to copy the labels file as well, which maps the model outputs to names: the model outputs class indices such as 0 and 1, and the labels file gives the corresponding object/category name, for example 0 - car, 1 - chair.

In Android, use the TensorFlow Lite Interpreter (org.tensorflow.lite.Interpreter) to load the model and run it on new inputs. The following call performs forward propagation on a new input and writes the prediction into result:

mInterpreter.run(newInput, result)

The user draws on the screen and taps the predict button; the drawing is then converted into an image and fed into the model interpreter to get the result. Finally, the result is looked up in the labels dictionary and the corresponding label is shown on the screen, in less than 0.05 milliseconds.
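The final lookup step can be sketched as follows. The Android app would do this in Java or Kotlin; Python is used here only to mirror the logic. The labels file layout ("index - name", one per line), the helper names and the scores are all assumptions made for illustration.

```python
def parse_labels(text):
    """Parse lines such as '0 - car' into a dictionary {0: 'car'}."""
    labels = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first hyphen only, so names like 't-shirt' stay intact.
        index, name = line.split("-", 1)
        labels[int(index)] = name.strip()
    return labels


def top_prediction(scores, labels):
    """Return the label and score of the highest-scoring class."""
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best], scores[best]


# Hypothetical labels file content and model output scores.
labels_text = """0 - car
1 - chair
2 - t-shirt"""

labels = parse_labels(labels_text)
scores = [0.10, 0.85, 0.05]

name, confidence = top_prediction(scores, labels)
print(name, confidence)
```

The model itself only ever returns numbers; the labels file is what turns the index of the highest score into a name that can be shown to the user.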

In summary, what stands out is that the model runs locally on the Android phone. Typically, high-performance machine learning models are deployed on a server and mobile apps consume them through APIs. In general, however, that approach is slower, since the request-response lifecycle can take a few seconds.

Although running machine learning models locally on an Android phone is faster, the model does not learn anything after being deployed to the phone; it simply makes predictions. Mobile devices either do not have sufficient power for continuous learning or would take a very long time to train on device. Some form of feedback loop is therefore still needed, in which users verify the model outputs and their feedback is collected and sent back to the server for continuous learning. The app should then always download the latest model from the server so that performance improves over time.

Discover the demo app with detailed code here.
